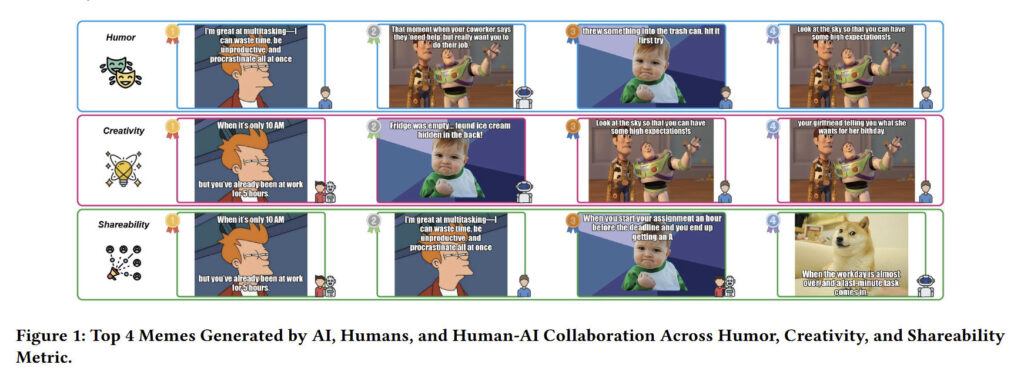

A new study examining meme creation found that AI-generated captions for existing, well-known meme images scored higher on average for humor, creativity, and "shareability" than captions written by people. Even so, people created the most exceptional individual examples.

The research, which will be presented at the 2025 International Conference on Intelligent User Interfaces, paints a nuanced picture of how AI and humans perform differently in humor creation tasks. The results were surprising enough to prompt one expert to declare victory for the machines.

"I regret to announce that the meme Turing Test has been passed," wrote Wharton professor Ethan Mollick on Bluesky after reviewing the study results. Mollick studies AI academically, and he was referring to a famous test proposed by computing pioneer Alan Turing in 1950, which asks whether people can distinguish a machine's output from content created by a human.

But maybe it's too soon to crown the robots. As the paper states, "While AI can boost productivity and create content that appeals to a broad audience, human creativity remains crucial for content that connects on a deeper level."

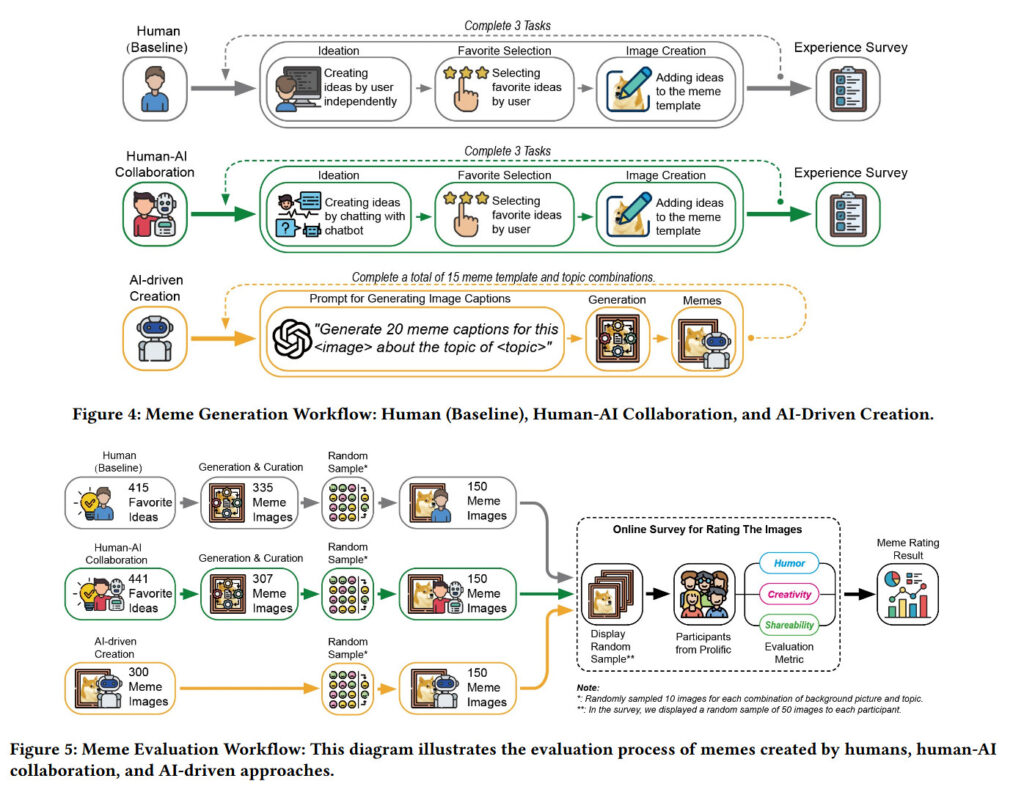

The international research team from KTH Royal Institute of Technology in Sweden, LMU Munich in Germany, and TU Darmstadt in Germany set up three test scenarios to compare meme-creation quality: memes created by humans working alone, memes created by humans collaborating with a large language model (LLM), specifically OpenAI's GPT-4o, and memes generated entirely by GPT-4o without human input.

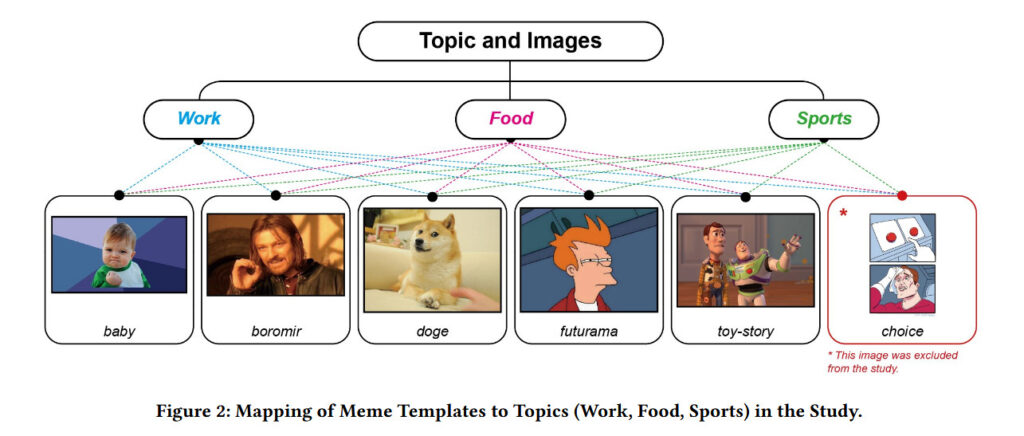

The researchers tested meme captions across three relatable categories (work, food, and sports) to explore how well AI and humans handled humor in familiar contexts. They found notable differences in performance across these categories. For example, memes about work tended to be rated higher for humor and shareability than those about food or sports, which suggests that context can influence the effectiveness of meme humor, whether the meme is created by humans or AI.
